What Is Context Engineering? Why It Goes Beyond Prompt Engineering in Real-World AI Systems

Context engineering is something every AI engineer and builder needs to understand: building systems that provide the right context. Prompt engineering supports that work.

There’s a new buzzword in the AI world: Context Engineering.

I first saw it when Andrej Karpathy retweeted a post by Tobi Lütke, CEO of Shopify, stating that “context engineering” is a better term than “prompt engineering.” That caught my eye. A lot of things fly around on Twitter (or X or whatever we’re calling it now), but this one stuck with me.

In this post, I’ll break down:

What exactly is context engineering?

How is it different from prompt engineering?

Why does it matter right now, more than ever?

And what can you do to start thinking like a context architect, not just a prompt tinkerer?

What Is Context Engineering?

Let’s make it simple.

If prompt engineering is about writing good instructions, context engineering is about giving the AI everything it needs to actually do the job.

Large Language Models (LLMs) like GPT-4, Claude, or Gemini can generate incredible output — but only when they have the right context. That context isn’t just your prompt. It’s everything the model knows or can access in that moment: documents, tools, APIs, previous conversations, your preferences, current data, and more.

Context Engineering is the practice of designing systems that supply the AI with the right information at the right time. It’s about making sure the AI has what it needs beyond the prompt, so it can solve real problems.
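To make that definition concrete, here is a minimal sketch of what "supplying the right information" can look like in code. It assumes the context is plain text assembled before each model call. The names `ContextBundle` and `render_context` and the section titles are hypothetical, chosen for illustration only, and do not come from any particular library.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Everything the model sees for one request (all names are illustrative)."""
    instructions: str
    user_prompt: str
    documents: list[str] = field(default_factory=list)
    history: list[str] = field(default_factory=list)
    preferences: dict[str, str] = field(default_factory=dict)


def render_context(bundle: ContextBundle) -> str:
    """Flatten the bundle into one text block, skipping empty sections."""
    sections = [("Instructions", bundle.instructions)]
    if bundle.preferences:
        prefs = "\n".join(f"- {k}: {v}" for k, v in sorted(bundle.preferences.items()))
        sections.append(("User preferences", prefs))
    if bundle.history:
        sections.append(("Previous conversation", "\n".join(bundle.history)))
    if bundle.documents:
        sections.append(("Reference documents", "\n---\n".join(bundle.documents)))
    # The user's prompt goes last, after all the supporting context.
    sections.append(("Task", bundle.user_prompt))
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections)
```

In this sketch the user's prompt is only the final section. Everything above it is gathered by the system, which is the part context engineering is concerned with.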

Who Coined the Term “Context Engineering”?

The phrase “context engineering” was coined by Walden Yan, co-founder of Cognition, the company that created Devin — the AI software engineer that made headlines in 2024.

In a blog post, Yan explained the shift:

“Prompt engineering was coined as a term for the effort needed to write your task in the ideal format for an LLM chatbot. Context engineering is the next level of this. It is about doing this automatically in a dynamic system.” — Walden Yan, Cognition

That quote sums it up: prompt engineering is manual; context engineering is systemic.

What Industry Leaders Are Saying About It

The term didn’t take long to get picked up by some of the most respected voices in AI.

🧠 Andrej Karpathy (@karpathy), formerly of Tesla and OpenAI:

“+1 for ‘context engineering’ over ‘prompt engineering’… it better captures what matters in production-grade systems.”

🏢 Tobi Lütke, CEO of Shopify:

“I really like the term ‘context engineering’ over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM.”

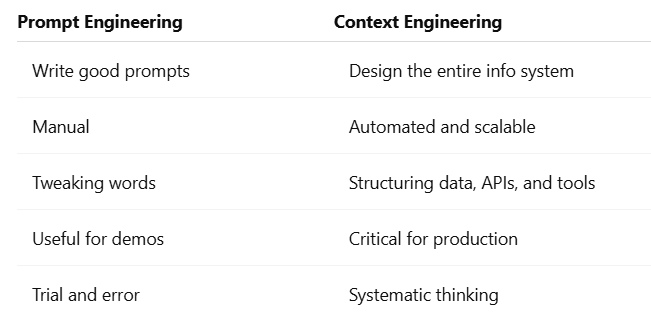

Context Engineering vs Prompt Engineering: What’s the Difference?

Think of prompt engineering as writing a to-do list for someone.

Context engineering is giving them the tools, access, and data they need to actually do the job.

Why Prompt Engineering isn’t Enough Anymore

Most people still think AI magic happens because someone wrote the perfect prompt.

But here’s what really happens in production systems:

👉 Your one-line prompt is just the starting point.

👉 The AI can pull from 500+ other sources: previous chats, internal docs, APIs, dashboards, tools, logs, and real-time feeds.

In a system like that, your prompt can end up as roughly 0.1% of what the AI actually processes.

The real job now?

Feed the AI the 99.9% it actually needs.

And that’s what context engineering is about.

How Do You Engineer Context?

Here are 4 ways you can build context-aware AI systems today:

1. RAG Systems (Retrieval-Augmented Generation)

Let your AI pull from:

Internal docs

Knowledge bases

Product catalogs

SOPs

Meeting notes
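The retrieval step can be sketched in a few lines. This is a simplified illustration, not a production RAG pipeline: it ranks documents by plain word overlap, whereas real systems typically use embeddings and a vector store. The function names `tokenize`, `retrieve`, and `build_prompt` are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of up to k documents sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [
        (len(query_words & tokenize(body)), name)
        for name, body in documents.items()
    ]
    # Keep only documents with at least one shared word, best score first.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [name for _, name in ranked[:k]]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Prepend the retrieved documents to the question."""
    names = retrieve(query, documents, k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Given a small dictionary of SOPs, catalog entries, and meeting notes, `build_prompt` includes only the entries that share words with the question, so the model sees relevant material rather than everything at once.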

2. Memory Management

AI needs to remember:

Previous chats

User preferences

Recent actions

What worked before
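One simple way to approach memory is to keep a bounded window of recent turns next to longer-lived preferences. The sketch below assumes an in-process store. The `ConversationMemory` class and its methods are hypothetical, and a real system would likely persist this data and summarize older turns instead of dropping them.

```python
from collections import deque


class ConversationMemory:
    """Keeps the last few turns plus long-lived user preferences."""

    def __init__(self, max_turns: int = 3) -> None:
        # A deque with maxlen silently drops the oldest turn when full.
        self.turns: deque[tuple[str, str]] = deque(maxlen=max_turns)
        self.preferences: dict[str, str] = {}

    def add_turn(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def set_preference(self, key: str, value: str) -> None:
        self.preferences[key] = value

    def as_context(self) -> str:
        """Render preferences and recent turns as text for the next request."""
        prefs = [f"{k}={v}" for k, v in sorted(self.preferences.items())]
        lines = ["Preferences: " + (", ".join(prefs) if prefs else "none")]
        lines += [f"{role}: {text}" for role, text in self.turns]
        return "\n".join(lines)
```

The fixed window is a deliberate simplification: it keeps the context small, at the cost of forgetting anything older than `max_turns`.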

3. Tool and API Orchestration

Let the AI call the tools it needs:

Trigger dashboards

Run calculations

Connect to CRM or CMS
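Tool orchestration usually comes down to a registry of callable tools plus a dispatcher that runs whichever one the model asks for. The sketch below assumes the model emits a JSON object with `name` and `arguments` keys. That request shape, the `tool` decorator, the `dispatch` function, and the fake CRM data are all hypothetical and do not reflect any specific vendor's API.

```python
import json
from typing import Callable

# Registry mapping tool names to plain Python functions (all hypothetical).
TOOLS: dict[str, Callable[..., object]] = {}


def tool(func: Callable[..., object]) -> Callable[..., object]:
    """Decorator that registers a function under its own name."""
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def lookup_customer(customer_id: str) -> dict:
    # Stand-in for a CRM call; a real system would query an API here.
    fake_crm = {"c-1": {"name": "Ada", "plan": "pro"}}
    return fake_crm.get(customer_id, {})


def dispatch(tool_call_json: str) -> str:
    """Run the tool named in a model-produced JSON request and return JSON."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    return json.dumps({"result": TOOLS[name](**args)})
```

The string returned by `dispatch` would be fed back to the model as additional context for its next step. Unknown tool names produce an error payload rather than an exception, so the model can recover.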

4. Real-Time Feeds and Multi-Modal Context

Pull in:

Market data

IoT info

Images

CSVs

Dashboards
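Raw feeds and files rarely go into the context as-is. A common pattern is to condense them into a short, timestamped summary first. The sketch below does that for a CSV using only the standard library. The function name `csv_to_context`, the column names, and the output format are assumptions made for illustration.

```python
import csv
import io
from datetime import datetime, timezone


def csv_to_context(csv_text: str, value_column: str, fetched_at: datetime) -> str:
    """Summarize one numeric CSV column as a short, timestamped text snippet."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    values = [float(row[value_column]) for row in rows]
    if not values:
        return f"No rows available (fetched {fetched_at.isoformat()})."
    return (
        f"{value_column}: latest={values[-1]:g}, "
        f"min={min(values):g}, max={max(values):g}, rows={len(values)} "
        f"(fetched {fetched_at.isoformat()})"
    )


# Example: a tiny market-data feed captured at a known time.
feed = "time,price\n09:00,101.5\n09:01,102\n09:02,100.25\n"
snippet = csv_to_context(feed, "price", datetime(2025, 1, 1, 9, 3, tzinfo=timezone.utc))
```

Including the fetch time in the snippet lets the model, and anyone reading the logs, tell how fresh the data was when the answer was generated.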

All of this gives the AI more relevant, current information to work from, which makes its output smarter and more reliable.

Why This Matters Now

If you’re building AI products, experimenting with tools, or even just trying to figure out why your LLM isn’t doing what you want…

Stop thinking, “how do I write a better prompt?”

Start thinking, “what context does my AI need to succeed?”

Because here’s the truth:

Two people can use the same model. One gets magic. One gets garbage.

The only difference is the context.

Final Thoughts: Build Systems, Not Just Prompts

Prompt engineering helped us get started.

But now, AI is infrastructure. And if you’re building anything serious — a chatbot, a personal agent, a business tool — you have to think in systems.

That’s what Context Engineering is all about.

Don’t just write better prompts.

Build smarter systems.

Feed the AI what it needs — not just what fits in one input box.

In the words of Ethan Mollick, professor at Wharton:

“It’s not just about crafting a useful LLM input — it’s about encoding how your company works.”

And that’s the skill that will separate the hobbyists from the real builders.
