ChatGPT 5: Game-Changer or Just Overhyped Noise?

ChatGPT 5 review: $1.25/M tokens pricing, 4.8% hallucination rate, 74.9% SWE-bench score. Is it worth switching from Claude? Real testing results inside.

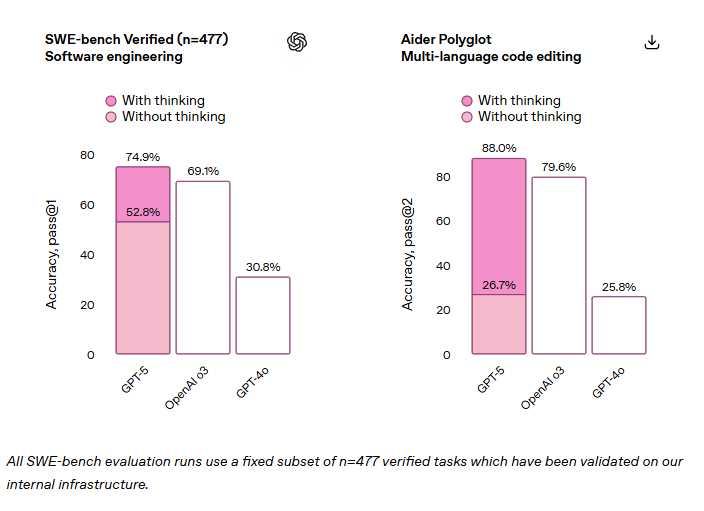

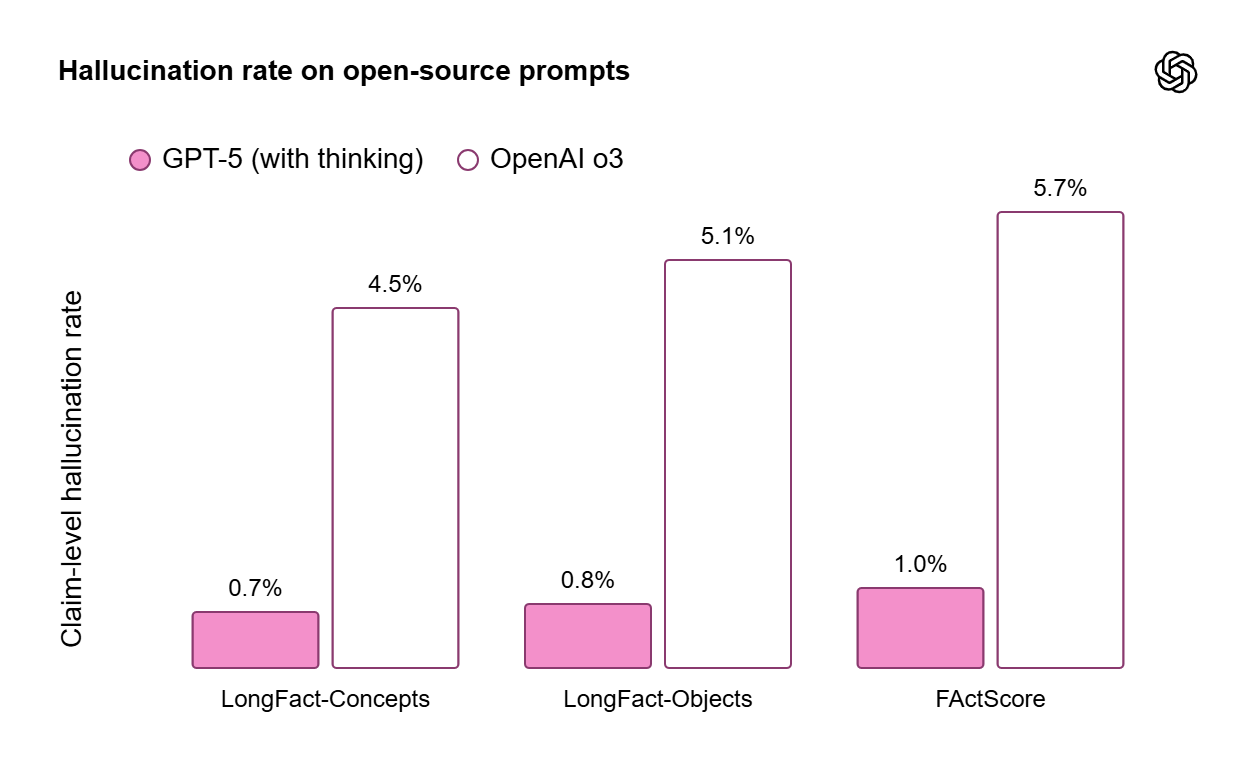

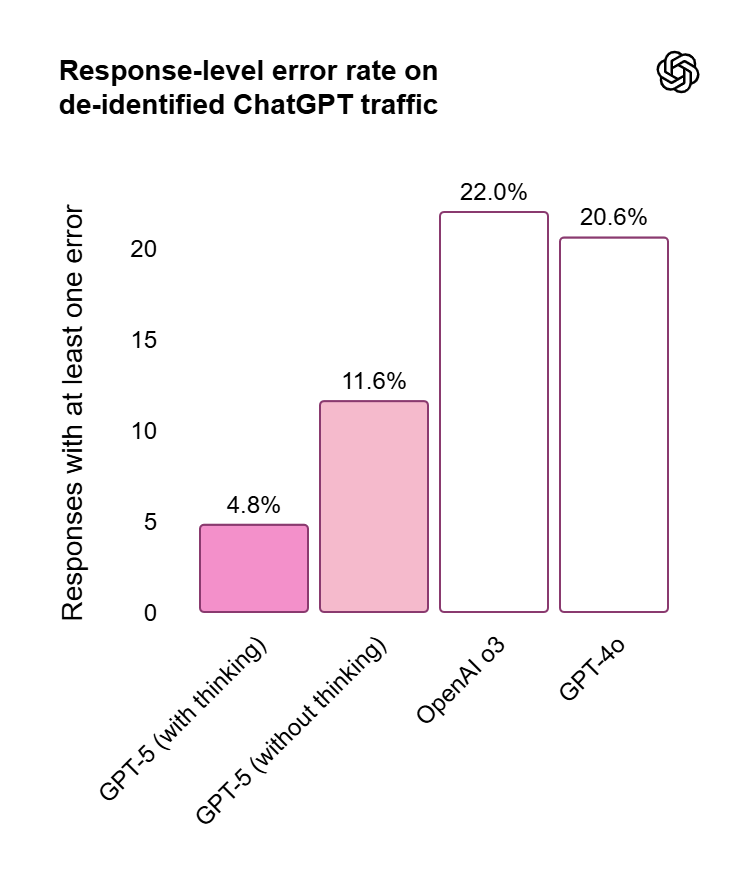

On August 7, 2025, OpenAI dropped ChatGPT 5 with the kind of fanfare usually reserved for iPhone launches. Sam Altman teased it as our next step toward AGI. Early access influencers called it "one of the best models ever." The benchmarks looked incredible: 74.9% on SWE-bench, a 4.8% hallucination rate, PhD-level reasoning.

But here's what happened when the hype hit reality: OpenAI yanked GPT-4o access to force users onto the new model. The community revolted. Reddit threads titled "GPT-5 is horrible" hit 4,600 upvotes. Developers started switching back to Claude.

I've been testing ChatGPT 5 across multiple platforms — ChatGPT.com, Cursor, Lovable — and honestly? It's complicated. This isn't the AGI breakthrough we were promised, but it's not the complete disaster angry users claim, either.

First, let's look at what GPT-5 actually is.

No Time to Read? Here's the Scoop

✅ Hallucination rates plummeted: 4.8% vs GPT-4o's 20.6% - huge win for accuracy

✅ Debugging is legit: Fixed my UI bugs faster than Claude in specific cases

✅ Aggressively cheap pricing: $1.25/$10 per million tokens undercuts most competitors

✅ Mini/Nano variants: $0.25/$2 and $0.05/$0.40 make high-volume use viable

❌ Creative tasks feel flat: Generic, soulless outputs that scream "AI-generated"

❌ Restrictive limits: 200 messages/week on Thinking mode feels stingy

❌ OpenAI killed GPT-4o access: Community backlash was swift and justified

Bottom line: A specialized tool with aggressive pricing, not a revolution. Great for debugging and cost-sensitive work, meh for creativity.

What's so special about GPT-5?

One unified system

Combines a fast, efficient model for most questions with a deeper "GPT‑5 thinking" model for complex tasks.

Real-Time Router: Instantly chooses the right model based on conversation type, complexity, tool needs, or your explicit requests (e.g., “think hard about this”).

Continuous Learning: The router improves over time using real signals, such as when users switch models, how they rate responses, and how often answers are measurably correct.

Smart Limits: When usage limits are hit, smaller versions of each model handle queries so service continues.

Future Vision: Plans are underway to merge these capabilities into a single, even more efficient model.
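To make the router idea concrete, here's a toy sketch of how that kind of dispatch could work. Big caveat: OpenAI hasn't published the router's internals, so everything here is my own assumption for illustration. The cue phrases, the length-based "complexity" check, the model labels, and the `Request` fields are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical cue phrases; the real router's signals are not public.
THINK_CUES = ("think hard", "think carefully", "step by step")


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False        # assumed flag: the task requires tool calls
    over_usage_limit: bool = False   # assumed flag: the user has hit their limit


def route(req: Request, complexity_threshold: int = 400) -> str:
    """Pick a (made-up) model label using simple, illustrative heuristics."""
    text = req.prompt.lower()
    explicit = any(cue in text for cue in THINK_CUES)
    # Prompt length stands in for "complexity" here; purely illustrative.
    complex_task = len(req.prompt) > complexity_threshold
    wants_thinking = explicit or complex_task or req.needs_tools
    model = "gpt-5-thinking" if wants_thinking else "gpt-5-main"
    # "Smart Limits": fall back to a smaller version of the chosen model.
    if req.over_usage_limit:
        model += "-mini"
    return model


print(route(Request("What is the capital of France?")))
print(route(Request("Think hard about this scheduling problem.")))
```

A real router would presumably learn these decisions from the usage signals described above rather than hard-code them; the sketch only shows the shape of the decision.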

The Marketing Machine vs Reality

What OpenAI Promised

"Next step toward AGI" - Sam Altman's exact words

Dynamic thinking: Integrated router that decides how much reasoning to apply

Benchmark domination: 74.9% SWE-bench, 89.4% GPQA Diamond, 4.8% hallucination rate

Human-expert matching on complex tasks like legal analysis

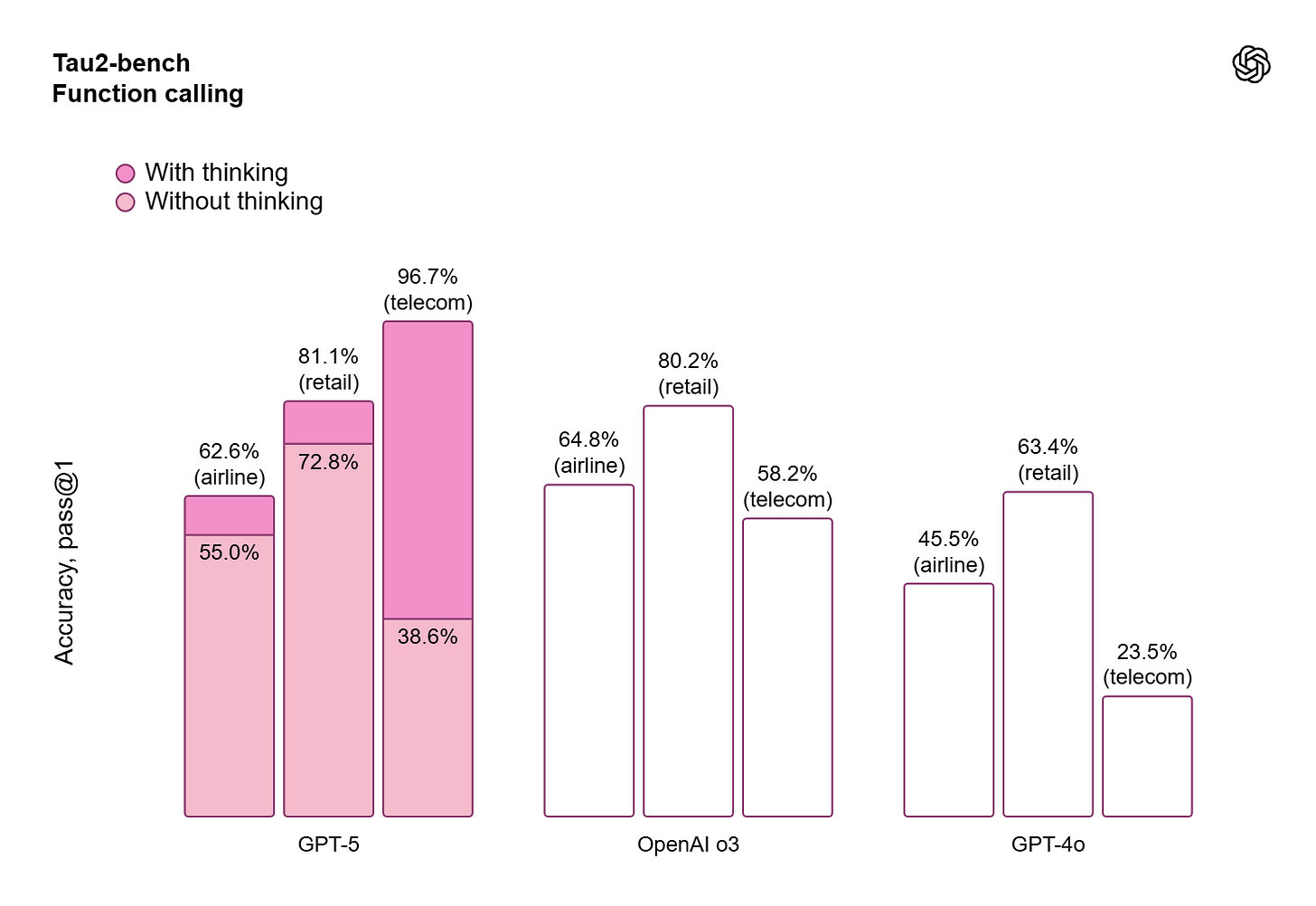

Great performance in Tool Calling and Function Calling

What Users Actually Got

Forced migration from beloved GPT-4o

Message limits that feel like downgrades (200/week for Thinking mode)

Personality-free responses that lack the creative spark of 4o

Mixed performance that's great for some tasks, terrible for others

The disconnect is jarring: OpenAI hyped the model as AGI-adjacent, then slapped restrictive limits on it.

My Real-World Testing: The Good, Bad, and Ugly

🎯 Where It Actually Shines

Debugging Victory: I was stuck on a UI bug in HelloAryan (my AI-powered resume creator) that Claude couldn't solve. ChatGPT 5 nailed it in two tries with precise, working fixes. That's not hype - that's measurable value.

UI Design Surprises: On Lovable, I prompted it to design a counselor marketplace. The result? Actually impressive - clean, professional, didn't look like typical AI slop. When the platform and model align, magic happens.

Accuracy Wins: That 4.8% hallucination rate isn't marketing fluff. For fact-checking and technical documentation, it's noticeably more reliable than GPT-4o's 20.6%.
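If you want to see what the gap between 4.8% and 20.6% works out to, here's a quick back-of-the-envelope calculation. The two rates are the ones quoted above; the "per 1,000 responses" figure assumes the rate applies per response, which is my simplification and not how the benchmark is necessarily defined.

```python
def hallucination_comparison(old_rate_pct: float, new_rate_pct: float) -> dict:
    """Compare two hallucination rates given as percentages."""
    return {
        # How many times lower the new rate is.
        "ratio": old_rate_pct / new_rate_pct,
        # Relative reduction, as a percentage of the old rate.
        "relative_reduction_pct": (old_rate_pct - new_rate_pct) / old_rate_pct * 100,
        # Fewer hallucinated responses per 1,000 (assumes a per-response rate).
        "fewer_per_1000": (old_rate_pct - new_rate_pct) * 10,
    }


result = hallucination_comparison(old_rate_pct=20.6, new_rate_pct=4.8)
for name, value in result.items():
    print(f"{name}: {value:.1f}")
```

That comes out to roughly a 4.3x lower rate, or about a 77% relative reduction.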

❌ Where It Falls Flat

Creative Desert: Building a landing page directly on ChatGPT.com produced bland, cookie-cutter designs that screamed "made by AI." Zero personality, zero spark.

The Personality Problem: Community complaints about "lobotomized" responses are valid. Compared to GPT-4o's conversational warmth, ChatGPT 5 feels clinically sterile.

Platform Dependency: Performance varies wildly depending on where you use it. Great in Cursor for debugging, mediocre standalone.

Check out the design below, which ChatGPT produced on its own platform. I gave it a thoroughly detailed prompt, and the output was still pretty sub-par.

Community Backlash: The Numbers Don't Lie

The internet's verdict is messy but revealing:

📉 Reddit Reality Check

r/ChatGPT thread: "GPT-5 is horrible" - 4,600 upvotes, 1,700 comments

Top complaints: Loss of GPT-4o, restrictive limits, "no soul" in responses

User quote: "Short replies, obnoxious AI-stylized talking, no soul."

🐦 X (Twitter) Split Decision

The Critics:

@AIRevolutionX: "OpenAI killed GPT-4o access to push GPT-5? Typical Sam Altman hype move."

@TechBit: "It's useless for creative stuff like writing a short story—way too stiff"

The Defenders:

@chatgpt21: "The fact that they slashed 4-5x on the hallucination end is astonishing"

@DevGuru99: "GPT-5's coding is legit—built a small app in one go"

The pattern is clear: technical tasks = wins, creative tasks = fails.

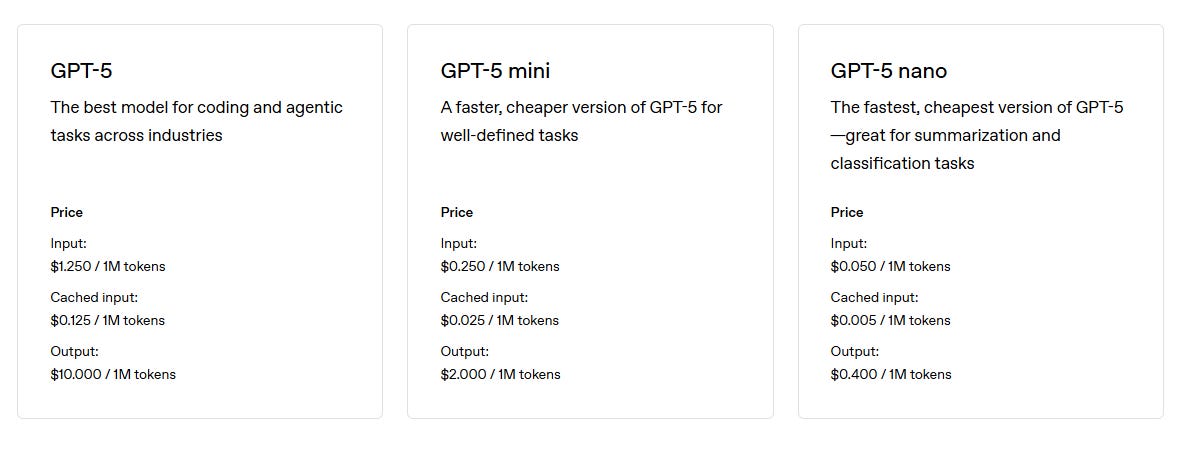

The Pricing Revolution: OpenAI Gets Aggressive

Here's where OpenAI made their smartest move - brutally competitive pricing that undercuts almost everyone:

💰 The New Cost Structure

GPT-5: $1.25 input / $10 output per million tokens

GPT-5 Mini: $0.25 input / $2.00 output (80% cheaper than full GPT-5)

GPT-5 Nano: $0.05 input / $0.40 output (insanely cheap for high-volume)

📊 Competitive Context

Claude Opus 4.1: $15 input / $75 output (12x GPT-5's input price, 7.5x its output price)

Gemini 2.5 Pro: $1.25 input / $10 output (matches GPT-5 exactly)

GPT-4o: $2.50 input / $10 output (GPT-5 is 50% cheaper on input)

This changes everything. OpenAI isn't just competing on performance - they're starting a price war.
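To see how those list prices play out on an actual bill, here's a small comparison script. The prices are the ones quoted in this article; the workload (2M input tokens, 500K output tokens) is a made-up example, so plug in your own numbers.

```python
# USD per million tokens as (input, output), taken from the figures above.
PRICES = {
    "GPT-5": (1.25, 10.00),
    "GPT-5 Mini": (0.25, 2.00),
    "GPT-5 Nano": (0.05, 0.40),
    "Claude Opus 4.1": (15.00, 75.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "GPT-4o": (2.50, 10.00),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one workload at the listed per-million-token prices."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical workload: 2M input tokens, 500K output tokens.
INPUT_TOKENS, OUTPUT_TOKENS = 2_000_000, 500_000

for model in sorted(PRICES, key=lambda m: cost_usd(m, INPUT_TOKENS, OUTPUT_TOKENS)):
    print(f"{model:16} ${cost_usd(model, INPUT_TOKENS, OUTPUT_TOKENS):.2f}")
```

Notice that the multiple between two models depends on your input/output mix, because input and output prices don't scale by the same factor. For this particular workload, Claude Opus 4.1 comes out at 9x the cost of GPT-5.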

The Mini and Nano Game-Changers

OpenAI's smartest move wasn't ChatGPT 5 itself - it was the Mini and Nano variants that make advanced AI genuinely accessible:

🎯 Sweet Spot Discovery

Mini hits the perfect balance - solid reasoning at $0.25/$2.00 pricing. Community feedback: "o1-level reasoning on a budget" and "great for coding and math without breaking the bank."

Nano enables entirely new use cases. At $0.05/$0.40 per million tokens, you can build high-volume applications that were economically impossible before.

💡 Real-World Impact

Startups: Can now afford to integrate advanced AI without venture backing

Enterprise: High-volume customer service becomes viable

Developers: Prototyping and testing costs drop to nearly zero
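Here's a rough way to sanity-check the high-volume claim for your own product. The traffic numbers are purely hypothetical (1 million requests a month, 500 input and 200 output tokens each); only the per-million-token prices come from this article.

```python
def monthly_cost_usd(
    requests: int,
    in_tokens_per_request: int,
    out_tokens_per_request: int,
    in_price_per_million: float,
    out_price_per_million: float,
) -> float:
    """Estimate a monthly API bill from per-request token counts."""
    total_in = requests * in_tokens_per_request
    total_out = requests * out_tokens_per_request
    return (total_in * in_price_per_million + total_out * out_price_per_million) / 1_000_000


# Hypothetical customer-service bot: 1M requests/month, 500 in / 200 out tokens each.
TIERS = {
    "GPT-5": (1.25, 10.00),
    "GPT-5 Mini": (0.25, 2.00),
    "GPT-5 Nano": (0.05, 0.40),
}

for tier, (in_price, out_price) in TIERS.items():
    bill = monthly_cost_usd(1_000_000, 500, 200, in_price, out_price)
    print(f"{tier:11} ${bill:,.2f} per month")
```

Under those assumptions, Nano lands at about $105 a month versus about $2,625 for full GPT-5. Whether Nano's quality is good enough for your use case is a separate question this math can't answer.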

When to Use What: The Practical Guide

Based on extensive community testing and my own experiments:

✅ Choose ChatGPT 5 (or Mini/Nano) for:

Cost-sensitive projects - pricing advantage is massive

Debugging complex code - it fixed a UI bug for me that Claude couldn't

High-accuracy factual work - with a 4.8% hallucination rate

High-volume applications - Nano pricing makes micro-interactions viable

Frontend UI tasks - especially when paired with platforms like Lovable

🎯 Choose Claude Opus 4.1 (Best for Coding and Reasoning) for:

Creative writing and personality - still the king of engaging content

Complex implementation tasks - better at full-stack development despite a near-tie on SWE-bench (74.9% vs 74.5%)

When budget isn't constrained - premium pricing ($15/$75) for premium results

Conversational warmth - GPT-5's clinical tone isn't for everyone

🔥 Hybrid Approach (Most Popular)

Smart developers are doing this: ChatGPT 5 for planning and debugging + Claude for implementation and creativity.

One Reddit user nailed it: "ChatGPT 5 for ideation and planning + Claude Code for implementation - it's a thing of beauty."

Bottom Line: Revolution or Evolution?

ChatGPT 5 isn't the AGI breakthrough Sam Altman hyped, but it's also not the disaster angry Reddit threads suggest. It's a specialized tool with game-changing economics.

🎯 The Real Value Proposition

For developers: Excellent debugging + affordable API costs

For businesses: Superior accuracy at competitive pricing

For high-volume applications: Nano pricing enables new business models

For creators: Probably still stick with Claude for personality

🔮 What This Means for AI Builders

The trend is clear: no single model dominates everything, but economics now matter more than ever. GPT-5's aggressive pricing forces competitors to justify premium costs with superior performance.

The market is evolving from "one AI to rule them all" to "right tool at the right price." ChatGPT 5's combination of mixed reception and strong pricing accelerates this trend.

My take? OpenAI under-delivered on the revolutionary angle but over-delivered on economics. The pricing strategy is brilliant - making advanced AI accessible while forcing premium competitors to prove their worth.

The Mini and Nano variants are the real stars here, democratizing access to powerful AI at previously impossible price points. That's not sexy, but it's revolutionary for anyone building AI products.

What's your experience been? Are you team ChatGPT 5 for the economics, staying loyal to Claude for quality, or building hybrid workflows? The AI landscape just got more price-competitive, and that benefits everyone except shareholders of premium AI companies.